
Step into the intersection of AI and cybersecurity, and you’ll find yourself at a point that is both thrilling and risky. This post looks at the relationship between these two domains. From the OG chatbot Eliza to the modern marvel that is ChatGPT, AI's evolution has transformed how we approach cybersecurity. But we are also witnessing the unforeseen consequences of AI misuse. As AI and cybersecurity converge to shape the present and future of our digital existence, our role as humans is yet to be determined.

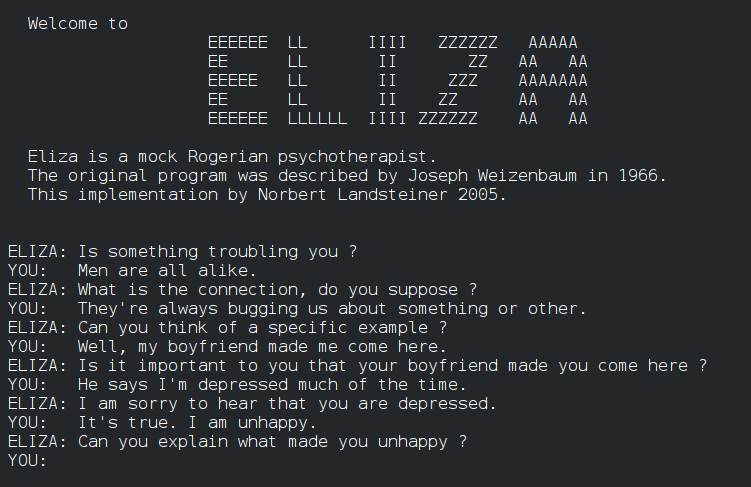

In 1966, MIT Professor Joseph Weizenbaum created the first computer program to simulate a therapy conversation, modeling it as a mock Rogerian psychotherapist named Eliza. It used pattern matching and simple transformations of the user's input to hold a conversation. This gave it the appearance of natural language processing, but it had no meaningful understanding of what it received and mostly reflected the user's own words back in its responses. Eliza’s impact was massive. It is credited with being the original chatbot and with providing the starting point and inspiration for the modern LLMs (Large Language Models) and virtual assistants used today.

Image source: ELIZA_conversation.png (wikimedia.org)
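To make “pattern matching and simple transformations” concrete, here is a minimal Python sketch of an Eliza-style responder. The rules, the pronoun table, and the function names (`respond`, `reflect`) are hypothetical illustrations, not Weizenbaum's original script, which was written in MAD-SLIP and used ranked keywords with decomposition and reassembly rules.

```python
import re

# Hypothetical, simplified rules in the spirit of Eliza's therapist script:
# each regex is paired with a response template that reuses the captured
# fragment of the user's input.
RULES = [
    (re.compile(r"i need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person words for second-person ones so echoed fragments read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "myself": "yourself"}

# Generic reply used when no rule matches.
FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Swap pronouns word by word; no understanding of meaning is involved."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Fill the first matching rule's template with the reflected fragment."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(text)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


if __name__ == "__main__":
    for line in ["I am worried about my job.", "I need a break!", "Hello there"]:
        print(f"> {line}\n{respond(line)}")
```

Given “I am worried about my job.”, the sketch replies “How long have you been worried about your job?” It never interprets the word “job”; it only swaps pronouns and slots the fragment into a template, which is exactly the lack of meaningful interpretation that limited Eliza.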

A lot has happened between Eliza and today, including A.L.I.C.E., Siri, and many more language model iterations. The latest breakthrough that has the world buzzing is ChatGPT. In 2017, a paper titled “Attention Is All You Need” detailed a revolutionary architecture called the transformer model. It set the language processing world on fire. The transformer architecture ignited a surge in the development of novel and expansive LLMs. Out of the workshops, labs, and plethora of LLM prototypes emerged the gleaming Generative Pre-trained Transformer (GPT) we know and love today.

ChatGPT has changed everything. Its simple, search-box-like interface and multilingual functionality make it easy for regular people to use. Just like that, anyone who knows how to Google can talk directly to AI and tap into its vast knowledge base.

Cybersecurity can now be explained more easily, with ready access to best practices, resources, and helpful anecdotes. The ability to translate a novice's questions into expert-level answers increases analyst effectiveness and upskills newcomers far more quickly than before. The accessibility and clarity of security information is the largest benefit that AI currently brings to the cybersecurity space.

AI has also given relief to overworked, understaffed security teams by identifying and flagging the need for basic system maintenance tasks such as patch updates, scanning, and data input. Its many applications have also made troubleshooting more efficient and reduced mean time to respond (MTTR). Here are a few examples of how it’s used to enhance security.

Connecting LLMs to other AI systems can also help communicate those systems' outputs through improved reporting, and an LLM can act as a quality-control layer over them.

Forrester recently released a report titled “Using AI for Evil.” It claims that “mainstream AI-powered hacking is just a matter of time.” The same tools that empower security teams to quickly locate vulnerabilities are used by threat actors racing the clock to locate and exploit those same vulnerabilities. The result is an online gunfight: a standoff to see who is quickest on the draw. There are even LLMs created just for the bad guys, like the malware-friendly WormGPT.

AI is a tool, and like any other, it is as good or as damaging as the motivations of the person wielding it. Threat actors are using AI to cause harm in novel ways. Data poisoning, which corrupts the data a model is trained on, can critically harm AI models and may go completely undetected. Misinformation has now been given a face and a voice, thanks to AI. Deepfakes and voice cloning can fool some voice authentication systems and verbal passwords, and they can be used to fabricate evidence for doxing. Deepfake videos are also a new frontier for identity fraud and misinformation, with lip-syncing techniques and head or body swaps making it look like someone said or did something they did not.

Combined, these advances mean that social engineering has never been so convincing. AI-generated phishing emails are seeing higher open rates than those written by humans, and they can be tailored to a victim’s interests, habits, and social connections.

The speed and scale at which AI's capabilities can be turned toward chaos create an urgent need for governing bodies to set limits on the technology.

Despite all of these advancements, humans are still very much needed for their judgment, creativity, and critical thinking. AI can help detect attacks and prioritize responses, but decisions cannot yet be fully automated. A human must still call the shots, with AI helping that human arrive at decisions faster.

“This is because of risk,” says Diana Kelley, Chief Information Security Officer (CISO) at Protect AI and special guest on Pwned episode 182. “Risk is a personal decision. There is no right or wrong answer. The situation must be contextualized for organizations' risk appetite, business requirements, etc. and AI is not there yet.”

For the same reason, NuHarbor Security VP Jack Danahy is optimistic that cybersecurity software developers aren’t working themselves out of their jobs by improving artificial intelligence performance. “AI eliminates the tasks, not the position. The position matures.” Danahy points to the calculator as an example: its introduction did not make accountants obsolete; it simply gave them time back to become better at their work. “AI allows analysts and service providers to level up their game and provide more value.”

We can’t talk about the future of AI and cybersecurity without talking about the perceived risks posed to humans. The CEO of OpenAI, the company behind ChatGPT, along with several engineers from the Google DeepMind AI unit, Turing Award winners, and many others signed an open letter stating, “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war.” More practically, we know that AI is already being used by threat actors for large-scale malware development. A meaningful concern is that these tools and the resulting technologies could learn and improve to the point of creating malware powerful enough to disrupt society by damaging critical infrastructure or even disabling sections of the internet.

It’s unlikely we will push pause on anything that has the potential to make our jobs easier, our systems safer, and our lives better, but we need to educate ourselves and our communities quickly if we hope to create a sustainable balance between progress and security.

To hear more about the practical applications of AI in cybersecurity, check out our podcast with cybersecurity thought leader and AI pioneer Diana Kelley.

Gartner found that 37% of companies are working on creating an AI strategy. Need help framing out yours? Consult with NuHarbor Security experts to make a plan today.
